We underfund our heroes, don’t we?

(Also, that monitor’s model name in the thumbnail: “UHD 4K 2K” :D)

Yet another reason to back Flatpaks and distro-agnostic software packaging. We can’t afford to use dozens of build systems to maintain dozens of functionally identical application repositories.

I will back Flatpaks when they stop feeling so sandboxed. I understand that is the point, but on Mint I don’t get any specific popup requests and instead have to sit in Flatseal and manually grant access. .deb 4 lyfe

The sandbox is the point! But yes, there are still shortcomings with the sandbox/portal implementation. If Snaps can find a way to improve the end-user experience despite containerising (most) apps, then so can Flatpak.

It’s similar to how we’re on the cusp of Wayland becoming the one and only display protocol for Linux, but we’re still living with the awkward pitfalls and caveats that come with managing such a wide-ranging FOSS project.

I am definitely not against flatpaks, but I still use system packages when possible. I find too many weird issues with flatpaks when I need them to do more than be a standalone application (see Steam). Flatpaks feel like a walled garden more than a sandbox. Just give me more UI prompts for what you want to access as opposed to needing an entirely separate program (Flatseal).

We have this guy saying we cannot build all the Alpine packages once to share with all Alpine users. Unsustainable!

On the other hand, we have the Gentoo crowd advocating for rebuilding everything from source for every single machine.

In the middle, we have CachyOS building the same x86-64 packages multiple times for machines with tiny differences in the CPU flags they support.

The problem is distribution more than building anyway, I would think. You could probably create enough infrastructure to support building Alpine for everybody on the free tier of Oracle Cloud. But you are not going to have enough bandwidth for everybody to download it from there.

But Flatpak does not solve the bandwidth problem any better (it just moves the problem to somebody else).

Then again, there are probably more Alpine bits being downloaded from Docker Hub than anywhere else.

Even though I was joking above, I kind of mean it. The article says they have two CI/CD “servers” and one dev box. This is 2025. Those can all be containers or virtual machines. I am not even joking that the free tier of Oracle Cloud (or wherever) would do it. To quote the web, “you can run a 4-core, 24GB machine with a 200GB disk 24/7 and it should not cost you anything. Or you can split those limits into 2 or 4 machines if you want.”

For distribution, why not torrents? Look for somebody to provide “high-performance” download servers, I guess, but in the meantime you really do not need any infrastructure these days just to distribute things like ISO images to people.

There are other costs, too. Someone has to spend a LOT of time maintaining their repos: testing and reviewing each package, responding to bugs caused by each packaging format’s choice of dependencies, and doing this for multiple supported distro versions! That’s a lot of man-hours that could still go toward app distribution but, pooled together, could help build even more robust and secure applications than before.

And, if we’re honest, except for a few outliers like Nix, Gentoo, and a few others, there’s little functional difference between the package formats, which simply came into existence to fill the same need before Linux was big enough to establish a “standard”.

Aaaanyway

I do think we could have package formats leveraging torrenting more though. It could make updates a bit harder to distribute quickly in theory but nothing fundamentally out of the realm of possibilities. Many distros even use torrents as their primary form of ISO distribution.

This is such a superficial take.

Flatpaks have their use-case. Alpine has its use-case as a small footprint distro, focused on security. Using flatpaks would nuke that ethos.

Furthermore, they need those servers to build their core and base system packages. There is no distro out there that uses flatpaks or appimages for their CORE.

Any distro needs to build their toolchain, libs and core. Flatpaks are irrelevant to this discussion.

At the risk of repeating myself, Flatpaks are irrelevant to Alpine because it’s a small-footprint distro used a lot in container base images, and containers use their own packaging!

Furthermore, Flatpaks are literal bloat compared to Alpine’s apk packages, which focus on security and minimalism.

Edit: Flatpak literally uses Alpine to build its packages. No Alpine, no Flatpaks. Period.

Flatpaks have their use. This is not that. Check your ignorance.

/s This was a Snap trap and you walked right into it!! You are right, Flatpaks are great but you cannot use them for everything. We all need to switch to Snaps so we can build our base packages in them!

Thank you Canonical I love your proprietary packaging. 👏🏿👏🏿👏🏿

I know there are limitations to Flatpak and other agnostic app bundling systems, but there are simply far too many resources invested into repackaging the same applications for each distro. These costs wouldn’t be so bad if more resources were pooled behind a single repository and build system.

As for using Flatpaks at the core of a distro, we know from Snaps that it is possible to distribute core OS components/updates via a containerised package format. As far as I know there is no fundamental design flaw that makes Flatpak incapable of doing so, other than a lack of will among distro maintainers to develop the features Flatpak would need to support it.

That being said, it’s far from my point. Even if Alpine, Fedora, Ubuntu, SUSE etc. all used their native package formats for core OS features and utilities, they could all stand to save a LOT in the costs of maintaining superfluous (and often buggy and outdated) software by deferring to flatpak where possible.

There needs to be a final push for Flatpak adoption, the same way we hovered between Wayland and Xorg for years until we decided that Wayland was most beneficial to the future of Linux. Of course, this meant addressing the flaws of the project and fixing a LOT of broken functionality, but we’re now closer than ever to dropping Xorg.

I’m a fan of flatpaks, so this isn’t to negate your argument. Just pointing out that Flathub is also using Equinix.

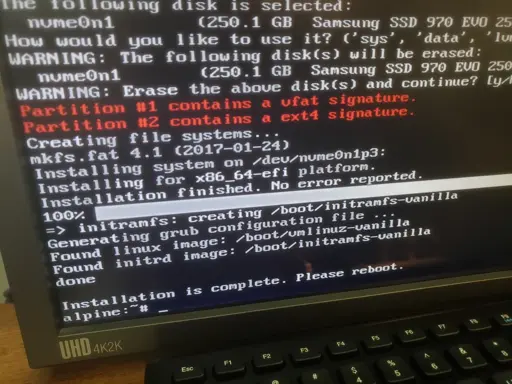

Interlude: Equinix Metal née Packet has been sponsoring our heavy-lifting servers doing actual building for the past 5 years. Unfortunately, they are shutting down, meaning we need to move out by the end of April 2025.

Pretty sure flatpak uses alpine as a bootstrap… Flatpak, after all, brings along an entire distro to run an app.

I don’t think it’s a solution for this, it would just mean maintaining many distro-agnostic repos. Forks and alternatives always thrive in the FOSS world.

Let the community package it as deb, rpm, etc. while the devs focus on Flatpak/AppImage.

Torrent?

That solves the media distribution related storage issue, but not the CI/CD pipeline infra issue.

First FreeDesktop and now Alpine, damn.

Dang, oof

It’s not too bad tho, we’ve already replaced this with Github actions: https://github.com/NixOS/nixpkgs/pull/356023

Equinix seems to be shutting down their bare metal service in its entirety. All projects using it will be affected.

How are they so small and underfunded? My hobby home servers and internet connection satisfy their simple requirements.

800TB of bandwidth per month?

That’s ~2.4Gbit/s. There are multiple residential ISPs in my area offering 10Gbit/s up for around $40/month, so even if we assume the bandwidth is significantly oversubscribed, a single cheap residential internet plan should be able to handle that bandwidth no problem (let alone a datacenter setup, which probably has 100Gbit/s links or faster).

If you do 800TB in a month on any residential service you’re getting fair use policy’ed before the first day is over, sadly.

With my ISP, the base package comes with 4 TB of bandwidth and I pay an extra $20 a month for “unlimited”.

I am not sure if “unlimited” has a limit. It may, but it is not in the small print. I may just be rate-limited (3 Gbps).

That averages out to around 300 megabytes per second. No way anyone has that at home commercially.

One of the best commercial fiber connections I ever saw provides 50 megabytes per second upload, best effort at that.

No way in hell you can satisfy that bandwidth requirement at home. Let’s not even mention that they need 3 nodes with that kind of bandwidth.

I have 3 Gbps home Internet (up and down). I get over 300 megabytes per second.

Can they not torrent a bunch of that bandwidth?

You’re completely missing what he’s saying, and how that number is calculated. It’s an average connection speed over time and you’re anecdotally saying your internet is superior because you have a higher connection speed, which isn’t really true at all.

You have residential internet which is able to provide 3Gbps intermittently. You may even be able to sustain those speeds for several days at a time. But servers maintain those connections for months and years at a time…

800TB/mo is 2.469 Gb/s sustained for 30 days. They may be on a 10Gb/s connection, but that doesn’t mean they have enough demand to saturate it 100% of the time.
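For anyone checking the numbers in this thread, here’s a quick Python sketch of the conversion. It assumes decimal terabytes (10^12 bytes, not TiB) and a 30-day month by default; the 800 TB/month and 130 TB/month figures come from the comments above, while the function names are just made up for illustration.

```python
SECONDS_PER_DAY = 86_400

def sustained_rate(terabytes_per_month: float, days: int = 30) -> dict:
    """Average rate needed to move a monthly transfer volume, assuming
    decimal TB (10**12 bytes) spread evenly over `days` days."""
    total_bytes = terabytes_per_month * 1e12
    bytes_per_s = total_bytes / (days * SECONDS_PER_DAY)
    return {
        "MB/s": bytes_per_s / 1e6,          # megabytes per second
        "Gbit/s": bytes_per_s * 8 / 1e9,    # gigabits per second
    }

def mbytes_to_gbits(mb_per_s: float) -> float:
    """Convert megabytes per second to gigabits per second (8 bits per byte)."""
    return mb_per_s * 8 / 1000

if __name__ == "__main__":
    for tb, days in [(800, 30), (800, 31), (130, 30)]:
        r = sustained_rate(tb, days)
        print(f"{tb} TB over {days} days: {r['MB/s']:.2f} MB/s, {r['Gbit/s']:.2f} Gbit/s")
    print(f"50 MB/s = {mbytes_to_gbits(50):.1f} Gbit/s")
```

That gives roughly 2.47 Gbit/s (about 309 MB/s) for 800 TB over 30 days and about 2.39 Gbit/s over 31 days, which is why both the ~2.4 and 2.469 figures show up here. Keep in mind these are averages only; real traffic peaks will be well above them.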

Yeah, that’s almost 150% more than my (theoretical) bandwidth at home (1 Gbps, but I live alone and just don’t want to pay much), and that is just assuming a constant workload (the peaks must be massive).

This is indeed considerable, yet hopefully solvable. It certainly is from the link perspective.

50MB/s is like 0.4Gbit/s. Idk where you are, but in Switzerland you can get a symmetric 10Gbit/s fiber link for like 40 bucks a month as a residential customer. Considering 100Gbit/s and even 400Gbit/s links are already widely deployed in datacenter environments, 300MB/s (or 2.4Gbit/s) could easily be handled even by a single machine (especially since the workload basically consists of serving static files).

So I have to move to Switzerland then, got it.

I think anywhere outside the US or Australia will do.

Probably not one person, but that could be distributed.

Like Folding@home :D

On my current internet plan I can move about 130TB/month and that’s sufficient for me, but I could upgrade my plan to satisfy the requirement.

Your home server might have the required bandwidth but not the requisite infra to support server load (hundreds of parallel connections/downloads).

Bandwidth is only one aspect of the problem.

Ten gig fibre for internal networking, enterprise SFP+ network hardware, big meaty 72 TB FreeBSD ZFS file server with plenty of cache, backup power supply and UPS

The tech they require really isn’t expensive anymore